The hidden culprit behind bad data

Thomas Redman, known as “the Data Doc”, famously noted that 50 years after “garbage in, garbage out” was coined, we still struggle with data quality. The main reason? Because we’ve tried to solve it with tech fixes instead of addressing underlying people issues. And isn’t that a story all too familiar?

He argues: “The solution is not better technology: it’s better communication between data creators and data users and a shift in responsibility for data quality away from IT into the hands of managers”.

Gartner takes the same view, stating that data quality must be treated as a business discipline owned by the whole organisation.

Business users are most often the ones creating and using data, so their habits and understanding directly impact quality. In practice, most data quality issues start at the point of entry or handling by humans.

A business user mis-typing a record, a sales rep not following standards in the CRM, or analysts working in silos: each causes a process breakdown that leads to “bad data.” The biggest problem is the disconnect between data producers and data users, and between how data is produced and how it is consumed.

In this article, we’ll explore the cost of poor data quality and how culture and accountability are the keys to solving the problem.

What is good data quality?

Before we look at the causes and solutions for bad data quality, let's identify what good data looks like.

High-quality data is accurate, complete, consistent, timely, and unique.

It's accurate, meaning values correctly reflect the real-world things they describe.

It's complete, meaning all essential details are available without gaps that could skew analysis.

It's consistent, ensuring the same data is represented uniformly across systems, preventing conflicts in reporting.

It's timely, meaning data is up-to-date and accessible at the moment it’s needed, preventing outdated insights from driving business strategies.

And finally, it's unique. It ensures that records are not duplicated, preventing inflated counts, redundant processing, and misleading conclusions.
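As a rough illustration, three of these dimensions can be scored directly from the records themselves. The sketch below uses only the Python standard library; the sample records, field names and the 365-day freshness window are assumptions made up for the example. Accuracy and consistency are left out because they need a trusted reference source or a second system to compare against.

```python
from datetime import date

# Hypothetical customer records; field names are illustrative only.
records = [
    {"id": 1, "email": "a@example.com", "updated": date(2025, 1, 10)},
    {"id": 2, "email": None,            "updated": date(2025, 1, 12)},
    {"id": 3, "email": "a@example.com", "updated": date(2023, 6, 1)},
    {"id": 4, "email": "d@example.com", "updated": date(2025, 1, 11)},
]

def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)

def uniqueness(rows, field):
    """Share of non-empty values that are distinct."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    return len(set(values)) / len(values)

def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within max_age_days of the as_of date."""
    fresh = sum(1 for r in rows if (as_of - r[field]).days <= max_age_days)
    return fresh / len(rows)

scores = {
    "completeness": completeness(records, "email"),
    "uniqueness": uniqueness(records, "email"),
    "timeliness": timeliness(records, "updated", date(2025, 1, 15), 365),
}
print(scores)
```

Scores like these are only a starting point: what counts as "complete enough" or "fresh enough" is a business decision, which is why the owners of the data need to set the thresholds.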

What’s the cost of poor data quality in organisations?

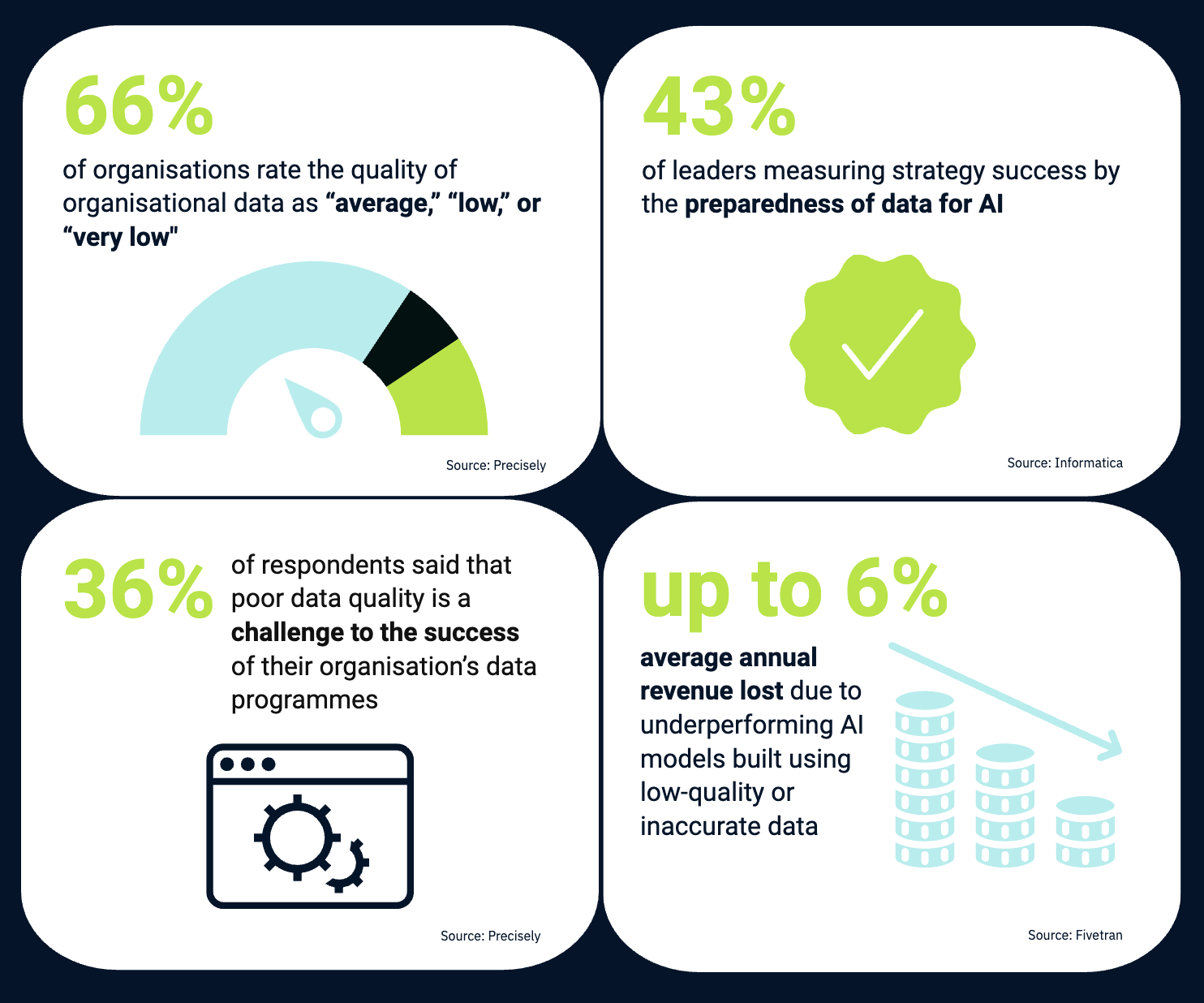

It’s not the newest stat, but in 2016 IBM researched the cost of poor data quality in the US and landed on a staggering figure of $3.1 trillion in annual losses. A decade later, we’re dealing with more system complexity and data than ever, so it is reasonable to assume the losses have grown, too.

But let’s dig deeper. In 2019, Harvard Business Review shared that knowledge workers waste up to 50% of their time hunting for data, identifying and correcting errors, and seeking confirmatory sources for data they do not trust. How does that translate into a labour cost? Let's imagine an organisation that has 100 analysts. At the upper end, that is the equivalent of 50 of them doing nothing but “data janitor” work. If you value an analyst at £65k/year, that’s over £3 million in productivity lost right there.
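The arithmetic behind that figure is easy to adapt to your own headcount. This is a minimal sketch using the numbers from the example above; the function name is made up for illustration, and the 50% share is the upper bound quoted, not a measured value.

```python
def lost_productivity_cost(analysts, wasted_share, annual_salary):
    """Annual salary cost of the time spent on data clean-up work."""
    equivalent_headcount = analysts * wasted_share
    return equivalent_headcount * annual_salary

# 100 analysts, up to 50% of time wasted, valued at £65k/year each.
cost = lost_productivity_cost(analysts=100, wasted_share=0.50, annual_salary=65_000)
print(f"£{cost:,.0f}")  # £3,250,000
```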

Another key cost is lost trust and adoption. When data is unreliable, managers and frontline employees lose faith in reports and revert to gut decisions. The result is suboptimal decisions and under-utilisation of expensive analytics systems. A survey by HFS Research found that 89% of executives agree high data quality is critical, yet 75% of them don’t trust their data fully.

It’s a startling disconnect. Companies are spending billions on BI and AI tools, but three-quarters of leaders lack confidence in the output, largely due to data quality concerns. Another survey reported that 99% of business leaders have concerns about their internal data.

Low trust directly undermines BI/AI ROI. Organisations might achieve only a fraction of the potential benefit of analytics if users don’t act on the insights. Indeed, Forbes reports that many organisations have slow adoption of analytics and BI tools because of a lack of trust in the data, making data trust a key hurdle to realising value from these investments.

Error-induced costs are also notable. In healthcare, one type of error (duplicate patient records) costs an average hospital up to $1.2M/year in denied claims. In finance, a well-known example is a major bank that accidentally overpaid nearly $900 million to creditors due to a spreadsheet data error, a costly mistake tied directly to data quality and human process. Although much of that was recovered, it led to litigation and reputational damage. While that’s extreme, it underscores that errors in financial data can cost companies millions in one blow.

Finally, opportunity cost is huge but often hidden. If your marketing data is messy, you might be missing chances to target customers effectively, leading to unrealised revenue. If your supply chain data is inaccurate, you might lose sales due to stockouts or tie up cash in excess inventory.

These “soft” costs can sometimes dwarf the hard costs. For instance, IBM found that in some organisations, up to 15% of revenue is lost through bad data in the form of missed opportunities or inefficient processes.

That is why it is important to frame data quality as a value driver, not just cost avoidance. When persuading other executives, CDAOs should present data quality initiatives in the language of business value and risk. They also need to help execs see that data quality goes beyond tools and governance: without human ownership, it fails.

The human factors behind poor data quality

Let’s go back to the biggest root cause of poor data quality and dig a bit deeper. Of course, we’re talking about human behaviour.

Let’s examine what the main challenges are.

Until it’s too late, nobody feels responsible for data

Ownership sits at the heart of managing data quality in every department, and it starts with accountability. People need to understand that maintaining data integrity is part of their role. An employee who makes a single error doesn’t see the bigger picture: the same mistake may be happening thousands of times across the organisation. When those errors all enter the data flow, the consequences can be dire.

That’s why it’s crucial to provide data ownership and stewardship education to give everyone the awareness that maintaining data accuracy is a vital part of their work.

A fractured data landscape starts with siloed teams

Silos are usually blamed for limited access and the lack of a single version of the truth, but data quality suffers too. Inconsistencies arise when different teams do things their own way, and reconciling data with varying naming conventions or structures causes a massive headache, undermining trust in your organisation’s data as a whole.

According to McKinsey’s findings, breaking down data silos results in a 15-25% increase in operational efficiency. But this requires executives and management to align incentives and create a shared language. They also have to tell the story of why the effort matters and how it will benefit everyone. This will help break down the “my department, my data” mentality.

Cognitive Bias: The invisible threat to data-driven decisions

Every person has inherent biases. The key is to become aware of them and understand how they impact our perceptions and actions. Whether it’s confirmation bias or selection bias, we’re all vulnerable, and both lead to flawed insights. The real problem is not knowing that you are biased, and having false confidence that the data you are presented with, or are entering, is unbiased. It’s easy to seek out data that confirms your own interpretation, which can have damaging consequences.

Bias-awareness training can help people recognise and mitigate their biases while allowing them to test assumptions in scenarios that won’t impact real decisions.

While improving data quality should increase your organisation’s trust in its data, skepticism should never be thrown out the window. Validation of sources remains an important element of critical thinking, and questioning data should always be welcomed to help drive further improvements.

How to solve the people problem in data quality

By now, it’s obvious that even the most sophisticated technology won't fix your quality issues without addressing the human element. So, let’s move on to solutions that enable good data quality to become the default within your organisation.

1. Create a data-driven culture with a vocal C-Suite

Quality initiatives must be driven from leadership. Your C-level champions should regularly communicate that data is a strategic asset and quality is everyone's responsibility. This means starting meetings by reviewing data metrics, calling out when poor data hinders progress, and tying data quality to business outcomes. Leaders need to consistently reinforce that better data leads to better decisions. And when you make data literacy part of performance metrics, people engage more quickly because they can be rewarded for it.

2. Manage change effectively

Improving data quality often requires changing how people work. Communicate clearly why new processes matter, connecting them to business outcomes people care about. Identify and showcase "quick wins" to build momentum. For example, after implementing a new data entry standard, highlight how it reduced duplicates and made reporting faster. Share success stories through internal channels to turn skeptics into believers.

3. Establish clear ownership and accountability

According to Gartner, a lack of clear ownership is a top obstacle to quality improvement. In 2025, having a Chief Data Officer is becoming standard, but distributed stewardship throughout the organisation is equally important.

- Assign specific data ownership roles across your organisation. Bring people into the fold by educating them on what it means to become a data owner or steward.

- Designate business managers as data owners for major domains like Customer or Finance data, making them accountable for quality in their area. Appoint data stewards to handle day-to-day quality tasks and bridge business, data and IT teams.

- Set up clear agreements or playbooks so teams understand how to collect, update and validate their data.

- Set up real-time validation rules that check data for accuracy before it’s allowed into the system.

At Data Literacy Academy, we provide Data Ownership and Stewardship certifications to help you achieve this at scale.

4. Implement a robust Data Literacy programme

In 2025, forward-thinking organisations are running formal data literacy training that covers how to interpret metrics, use analytical tools, and understand data governance responsibilities. Appoint data literacy champions in each department. These are power users who help colleagues with data questions. Consider gamifying data literacy through internal competitions or dedicated "data days" where teams solve problems using data.

Crucially, literacy efforts must also address trust. Share quality metrics transparently, showing "here's how we're improving, and here's what still needs work" to invite broader participation in quality efforts.

5. Align incentives with quality goals

Incorporate data quality KPIs into business unit scorecards. If Sales maintains CRM data, measure the completeness of their entries and make it part of their performance evaluation. Recognise teams that achieve significant improvements with awards or other incentives. On the accountability side, address repeated bypassing of data protocols just as you would any process violation: with appropriate consequences.

6. Focus on critical data and visible pain points

Don't try to solve everything at once. Identify the data elements most critical to your operations or decision-making and implement tighter controls around those. Talk to business users about their most painful data issues and tackle those first. These visible wins build support for broader governance.

7. Make quality proactive, not reactive

Shift from treating data cleansing as a periodic activity to embedding quality in daily processes. Implement validation at data entry points with front-end checks and automated rules. By catching errors at the source, you'll prevent them from propagating downstream, where fixing them becomes far more expensive.
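To make "validation at the point of entry" concrete, here is a minimal sketch of an automated rule set that a form or API could run before saving a record. Everything in it is an assumption for illustration: the field names, the deliberately simple email pattern and the allowed country codes would come from your own data standards.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
ALLOWED_COUNTRIES = {"GB", "IE", "FR"}  # hypothetical reference list

def validate_customer(record):
    """Return a list of human-readable problems; an empty list means the record passes."""
    errors = []
    if not (record.get("name") or "").strip():
        errors.append("name is required")
    if not EMAIL_PATTERN.match(record.get("email") or ""):
        errors.append("email is not in a valid format")
    if record.get("country") not in ALLOWED_COUNTRIES:
        errors.append("country must be one of: " + ", ".join(sorted(ALLOWED_COUNTRIES)))
    return errors

good = validate_customer({"name": "Ada", "email": "ada@example.com", "country": "GB"})
bad = validate_customer({"name": " ", "email": "not-an-email", "country": "XX"})
print(good)  # []
print(bad)   # three messages, one per failed rule
```

Returning readable messages rather than a bare pass/fail matters for the people side: the person entering the data learns what the standard is at the moment they break it.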

8. Set up continuous monitoring and swift remediation

Treat data pipelines as living processes that need monitoring and fast incident response. Implement automated alerts for data anomalies and develop a playbook for handling quality issues when they arise. The goal is to catch and resolve problems before they affect end users or reports, maintaining trust in your data.
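One simple form of automated alert compares today's value of a pipeline metric with its recent history. The sketch below flags a daily row count that sits more than three standard deviations from the historical mean. The metric, the sample numbers and the threshold are illustrative assumptions; real monitoring tools typically use more robust methods.

```python
from statistics import mean, stdev

def is_anomalous(history, today, threshold=3.0):
    """Flag today's value if it is more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > threshold

# Hypothetical row counts loaded by a daily pipeline over the last five days.
daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_900]

print(is_anomalous(daily_row_counts, 10_100))  # False: within the normal range
print(is_anomalous(daily_row_counts, 4_200))   # True: likely a partial load
```

An alert like this is only useful if the playbook says who receives it and what they do next, which brings the problem back to ownership.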

By balancing governance, culture, and technology, you can transform data quality from a data or IT team problem into an organisational advantage, one where quality becomes the expected default, not the exception.

The ROI of fixing the people problem in data quality

Now that you understand how to solve your data quality problems, let’s take a look at what happens when you do. Framing clear ROI will help you secure budgets for any initiative you wish to run.

The returns come in several flavours:

- Cost savings: efficiency and error reduction

- Revenue growth: better decisions, customer experiences, and new opportunities

- Risk mitigation: avoiding fines and failures

1. Cost savings

The operational savings from quality improvements can be substantial. Whether it’s reducing data reconciliation time, standardising data across systems or eliminating duplication, the hours of manual labour, or the cost of tools that do the work for you, can be cut once the root causes are fixed.

Consider quantifying your "data downtime" costs. If your data team spends 80 hours monthly fixing quality issues at an average cost of £400/hour, that's nearly £400,000 annually in just remediation labour. One UK telecommunications provider calculated that by reducing their data downtime from 300 to 25 hours monthly through better governance and monitoring, they achieved a 3.2× ROI on their data quality investment within the first year.
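A short sketch of that "data downtime" calculation, using the figures quoted above. The function name is made up for the example. The telecoms ROI cannot be reproduced here because the investment and hourly rate are not stated, so only the hours saved are computed.

```python
def annual_remediation_cost(hours_per_month, hourly_rate):
    """Yearly labour cost of fixing data quality issues."""
    return hours_per_month * 12 * hourly_rate

# 80 hours a month at £400/hour.
cost = annual_remediation_cost(hours_per_month=80, hourly_rate=400)
print(f"£{cost:,}")  # £384,000

# Telecoms example: downtime reduced from 300 to 25 hours a month.
annual_hours_saved = (300 - 25) * 12
print(annual_hours_saved)  # 3300
```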

2. Revenue growth

Quality data directly enables revenue-generating initiatives. For example, a retailer that cleans and enriches its loyalty programme data can target promotions better and increase basket sizes. When data quality is high, data is trusted and time isn’t wasted looking for it or verifying it. This efficiency can improve customer service, so customers churn less, buy more and recommend you to their community.

Link specific data quality metrics to business KPIs. One example can be: "By improving our product data accuracy from 85% to 98%, we expect to reduce product returns by 12%. With returns currently costing us £4M annually, that's a £480,000 saving—far exceeding our £175,000 quality programme investment."
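The business case in that example can be checked with a few lines. This is a sketch using the quoted figures; the 12% reduction is the expectation stated in the example, not a measured result, and the variable names are illustrative.

```python
returns_cost = 4_000_000   # current annual cost of product returns (£)
reduction_pct = 12         # expected reduction in returns (%)
investment = 175_000       # quality programme investment (£)

saving = returns_cost * reduction_pct / 100
net_benefit = saving - investment
return_multiple = saving / investment

print(f"Saving: £{saving:,.0f}")                   # Saving: £480,000
print(f"Net benefit: £{net_benefit:,.0f}")         # Net benefit: £305,000
print(f"Return multiple: {return_multiple:.2f}x")  # Return multiple: 2.74x
```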

3. Risk mitigation

The Financial Conduct Authority (FCA) has issued significant fines for data-related compliance failures. Rather than waiting for penalties, forward-thinking UK organisations are proactively investing in data quality. A London-based insurance company invested £800,000 in data governance and quality controls, avoiding potential regulatory fines while simultaneously improving their underwriting accuracy by 8%.

For manufacturers, preventing recalls due to data errors represents substantial savings. A British automotive parts supplier implemented rigorous data quality checks in their product specifications database, preventing a potential recall that would have cost upwards of £5M in warranty claims and brand damage.

To summarise ROI in simpler terms: every dollar invested in data quality returns several dollars in value.

Kevin Campbell of Syniti put it simply: “The cost of bad data is staggering. After thousands of projects, we know that almost 90% of the time when a business reports issues [like inventory problems or supply chain issues] it’s caused by a data problem.”

In other words: fix the data problem and you fix the business problem. Campbell’s point implies that solving data issues has a multiplier effect on business performance. Some organisations have quantified an ROI of 5x or more on data quality programmes.

Conclusion

With the rise of AI, data quality is now front and centre as a priority. The bad news is that many organisations are still at various stages of their data maturity journey and trust isn’t particularly high. However, there’s a clear imperative to implement data quality programmes now, as they will form the foundation of future data use, Gen-AI readiness and data ROI for every business.

Unlock the power of your data

Speak with us to learn how you can embed org-wide data literacy today.
